ChatGPT and search engines are made for traversing problem domains. Let's talk about that.

TL;DR - Are ChatGPT and search engines made for problem-domain traversal? Yes. Problem-domain traversal is practically just navigating knowledge to solve a problem. ChatGPT is the best at this because, much like a teacher, it figures out what you know and builds a metaphorical knowledge bridge to what you want to know. It does this by understanding the context and conversing with you. In the end, you can solve problems that were outside your area of understanding before.

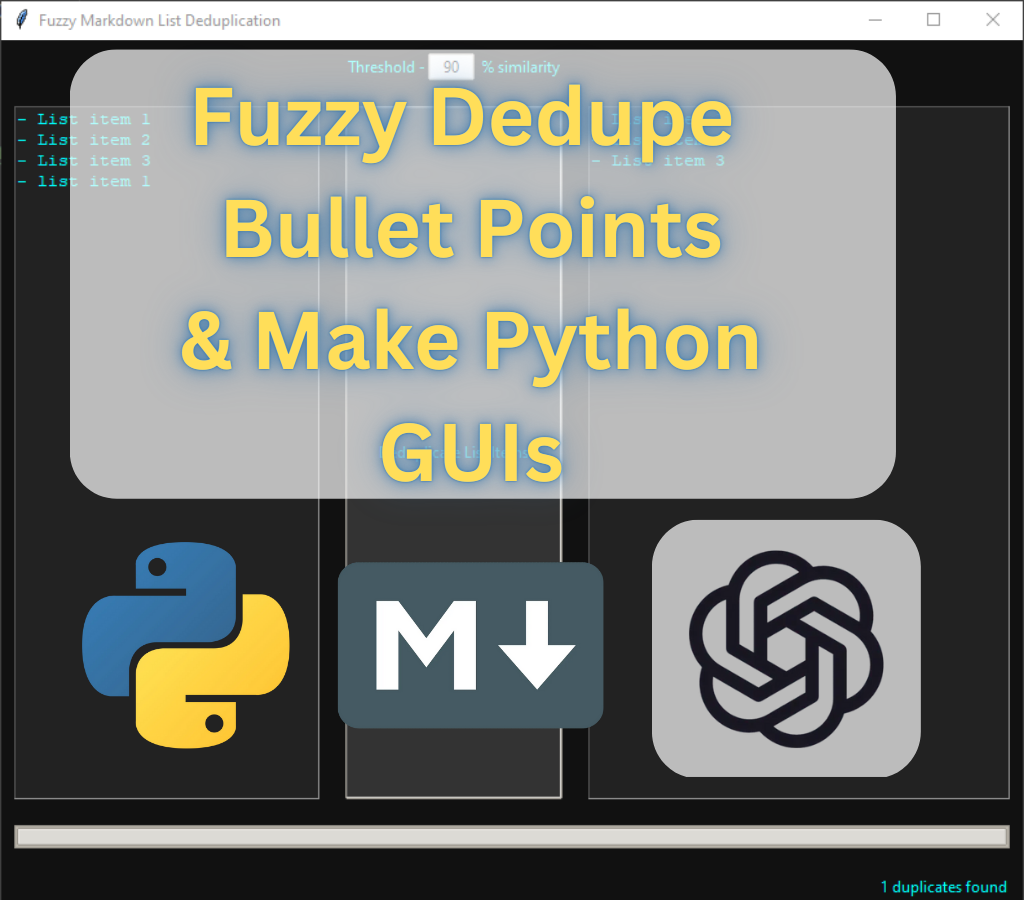

fuzzyListDedupeGUI

This post used to be part of a larger post about a tool I created. Feel free to check it out too. It removes "fuzzy" duplicate lines generated by ChatGPT😁.

https://blog.cybersader.com/fuzzy-deduplicate-bullet-points-from-chatgpt/

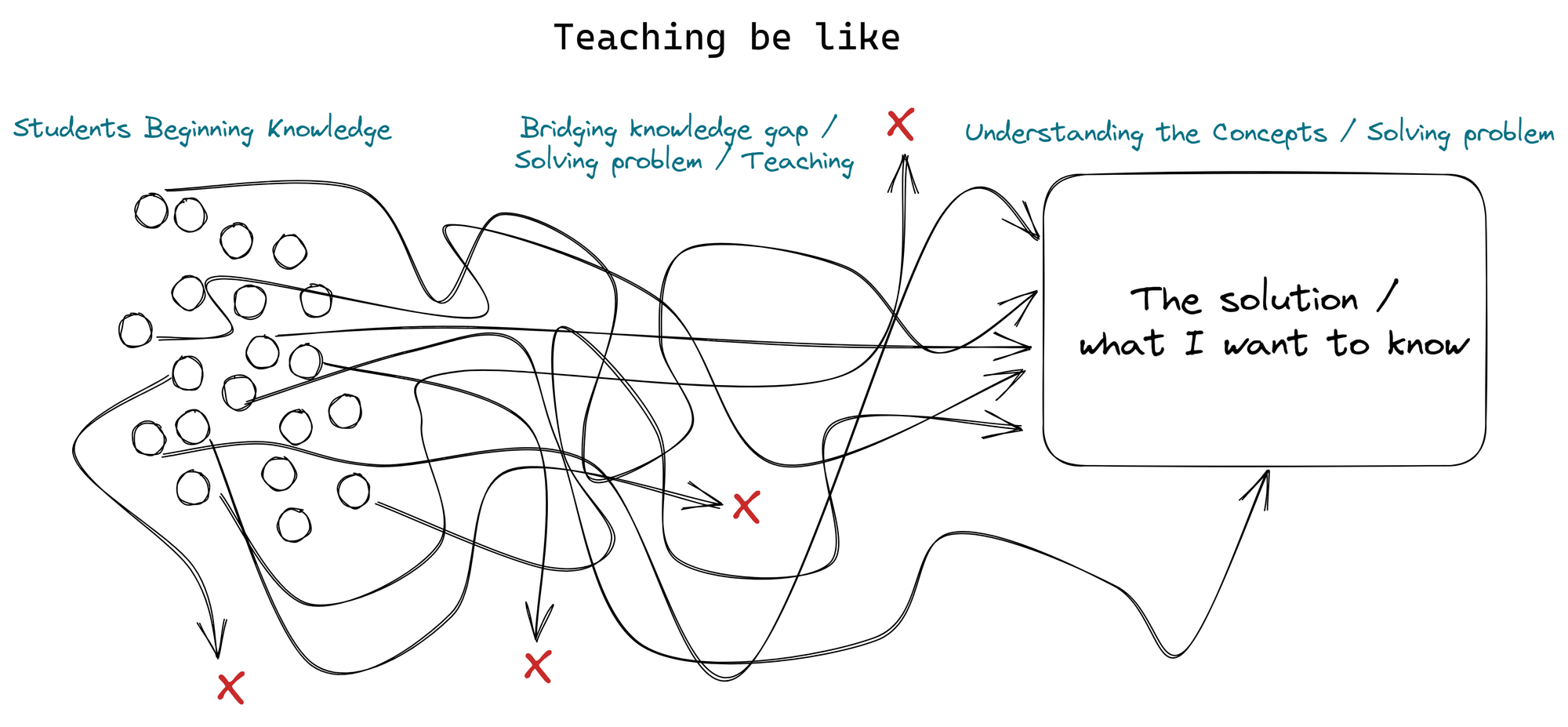

ChatGPT - not yet a search engine, but a great complement

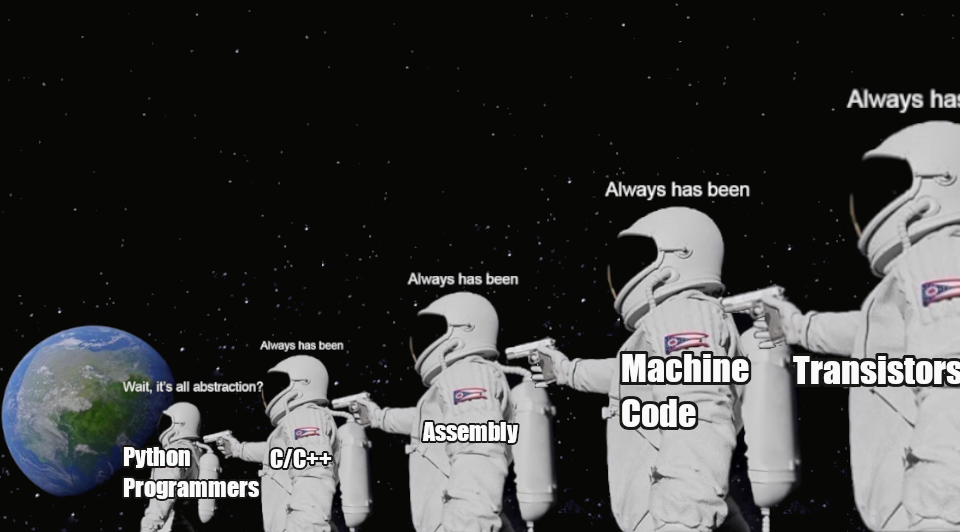

I'm coming from the perspective of what some would call "knowledge engineering." When it comes down to it, all of this can be related to AI training or compared to training children. Our brains are much cooler than AI models, so I wouldn't really compare them. However, the fact is that any brain or model starts out somewhere on the left, and to get to the desired knowledge configuration (the right), one may need to cross into new territories and scope out the problem domains they're looking to traverse. To put it another way, when you learned multiplication you had to understand addition and subtraction first. If you don't bridge the gap slowly with various abstractions and concepts, then learning new things is pretty much impossible.

Search engines were just the beginning (building bridges without machines)

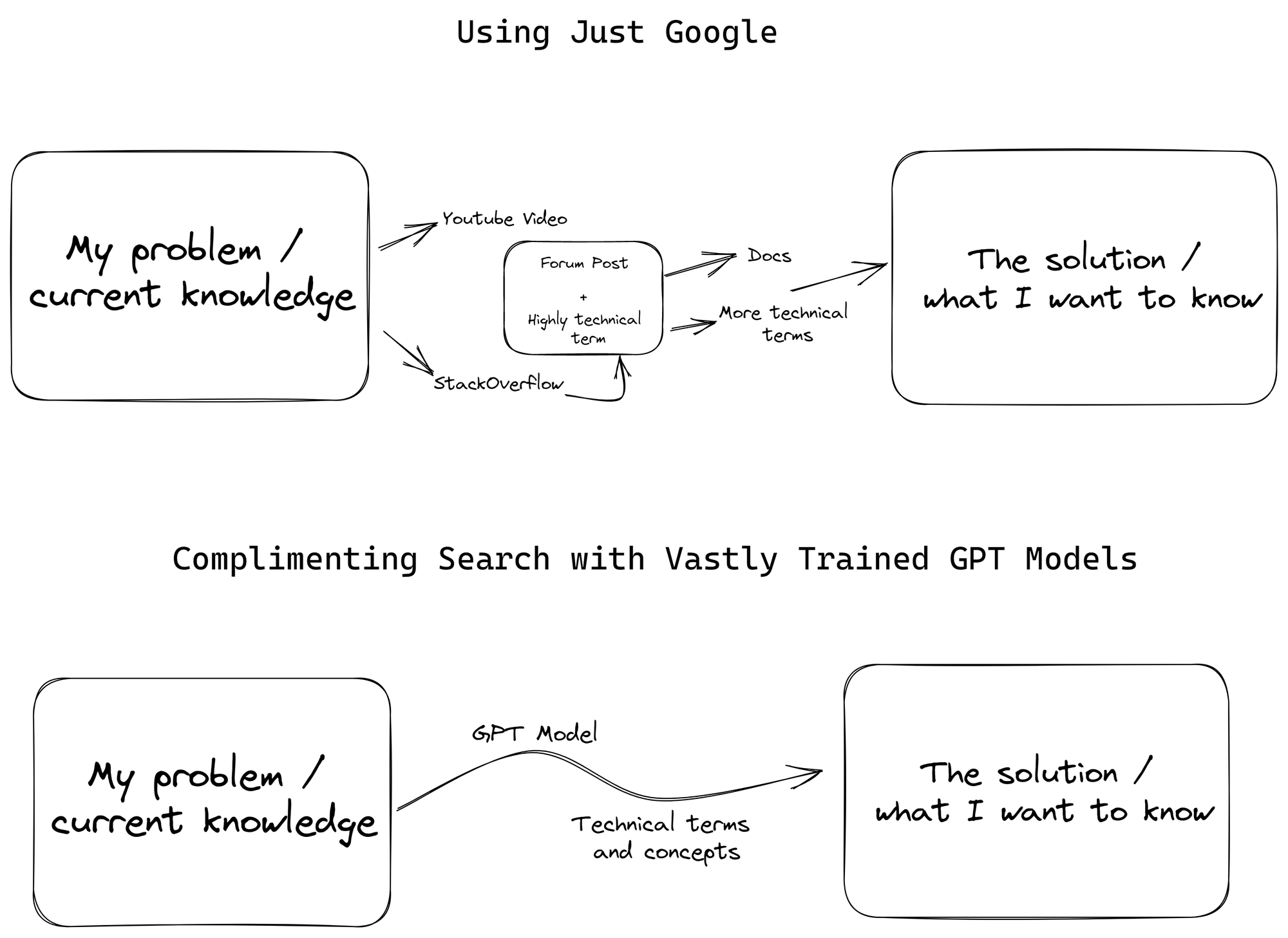

Search engines are a great starting point for the internet. I don't think GPT models are going to "kill" search engines😆. You still need some synthetic ways to navigate the internet. However, search engines by themselves have blatant limitations from the standpoint of knowledge communication. Namely, a single concept or term is sometimes the tipping point for learning something new or solving a problem. Navigating the internet with a search engine means that either you happen to search the right terms and arrive at knowledge that bridges your gaps, or someone else has architected and built enough bridges leading to that concept. Those bridge builders are the innovators of the world: the kind of people who simplify and market a solution or concept so well that it can be found and used by all sorts of "knowledge workers" (people using knowledge to solve problems).

AI changes how knowledge is obtained (automated bridge building)

ChatGPT isn't a new concept, but it is becoming more feasible, and the business model surrounding it is coming to fruition. Yes, it presents a lot of dilemmas, and legislation and oversight around AI, privacy, and model usage will be paramount in the next 5 years to keep major issues and conflicts from emerging. However, GPT models like ChatGPT are most definitely the next step toward large-scale "knowledge bridging," if I may make up a term. This concept also has a strong connection to knowledge graphs, which I believe will eventually become a crucial component of web crawling for training and live search in chatbot AI models. In short, ChatGPT serves as an automated bridge builder, connecting people with new areas of knowledge efficiently and seamlessly.

Now, in a literal sense it's much more complicated than that. GPT is a very complex model that completes its inputs with generated outputs based on what it's been trained on (look up "Generative Pre-trained Transformer"). In this analogy, you give it the knowledge or proposed solution you want to reach, and it builds a bridge to help you get there.

“Nothing vast enters the life of mortals without a curse.” - Sophocles

GPTs are to search engines what tractors were to hand plows. Just as tractors revolutionized farming by making it faster, more efficient, and more productive, GPTs are revolutionizing information retrieval and epistemology (the study of knowledge and how we come to know things) by making it faster, more efficient, and more productive.

Teaching is HARD & Knowledge Engineering

Information Retrieval - the process of finding bridges to the desired knowledge configuration, understanding, or solution. Then, one must cross them.

Epistemology - the study of knowledge and how we obtain it.

Knowledge Engineering - the methods for constructing bridges to traverse the landscape.

Ontology - the study of what exists and how we view reality...important in this realm of study. An ontology can also be the architecture or map of a specific problem domain, in which the "bridges" are constructed or obtained through IR (information retrieval). In that sense, an ontology is the landscape of a specific set of problems or knowledge.

Ontology Traversal - this encompasses how we navigate ontologies/problem domains.
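To make these terms a bit more concrete, here is a rough sketch (my own illustration, not a formal definition) that treats an ontology as a graph: concepts are nodes, "bridges" are edges, and traversal is finding a path from what you already know to what you want to know. The concept names and the `find_bridge` helper are hypothetical and only exist for this example.

```python
from collections import deque

# Hypothetical toy ontology: each concept maps to the concepts it unlocks.
ONTOLOGY = {
    "counting": ["addition"],
    "addition": ["subtraction", "multiplication"],
    "subtraction": [],
    "multiplication": ["division", "exponents"],
    "division": [],
    "exponents": [],
}


def find_bridge(ontology, known, target):
    """Breadth-first search from any known concept to the target.

    Returns the shortest list of concepts to cross, or None if this
    ontology contains no bridge from what is known to the target.
    """
    queue = deque([concept] for concept in known)
    visited = set(known)
    while queue:
        path = queue.popleft()
        if path[-1] == target:
            return path
        for nxt in ontology.get(path[-1], []):
            if nxt not in visited:
                visited.add(nxt)
                queue.append(path + [nxt])
    return None


print(find_bridge(ONTOLOGY, ["counting"], "exponents"))
# → ['counting', 'addition', 'multiplication', 'exponents']
```

A real problem domain is far messier than a six-node dictionary, but the idea is the same: if no path exists from your starting knowledge, someone (or something) has to build the missing edges first.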

Teachers have the specific job of bridging knowledge gaps for students, and their jobs are even more difficult because they have to define a curriculum or teaching strategy that ends with all students at the "what you want them to know" area. The problem is that every student starts with a distinct knowledge configuration. They all need slightly different bridges or routes to get to the "solution" area, and yet the teacher somehow has to create a bridge that works for the majority or build detours for various clumps of students. Not to mention, teachers have to constantly reevaluate students' abilities and knowledge configurations, and keep them remotely interested in doing the work to get to that point of understanding (@ my fiancée - she's a teacher🤣).

Figuring out the students' beginning knowledge configurations (the left side) is also not as easy as analyzing a GPT model. Human inputs and outputs are ludicrously slow (in terms of communication) when compared to GPT models. This is the reason for ideas like Neuralink. Teachers can't simply write a program to test understanding across 30 students. They have to make quick assumptions and design assessments to manually gauge this understanding. This relates closely to the core issues and controversy around standardized testing. The one thing humans have over these GPT models, though, is that we don't need that many inputs to generate some really good outputs or to build some good "knowledge configurations." In reality, we are much more efficient than GPT models when it comes to training, which is why Q&A formats and discussion are SUPER IMPORTANT. Not asking questions in class is equivalent to throwing away 1000s of dollars every semester.

3 things to remember:

- ⭐ Asking questions is essential to overall knowledge increase in society ⭐

- 🧐 If you can't say it without technical jargon, then maybe you don't understand it well enough 😬

- Ontology traversal is how we move through new problem domains. Systems like search engines and ChatGPT make it easier to do so.

Skill Networks in Cybersecurity

The idea of "skill stacks" expressed in the Cyberspatial video (below) frames the issue in a different way: from the perspective of skills rather than the "knowledge config" of your brain. It is more about progressing in skills, but it is still really valuable.

Topic Expansion - "Ontology Recon"

This is the step that comes before ontology or problem-domain traversal. It is the reconnaissance or exploration step you can use to map out the domains first. Then you can begin building bridges to those new domains or terminologies and start picking up their knowledge and concepts.

As shown in previous sections, ChatGPT is really good at bridging understanding gaps and, in turn, helping us solve problems. The thing that I really love to do is generate taxonomies, ontologies, or curations of specific topics. This helps me to figure out what I can begin searching on the internet to help me solve a particular problem.

As stated before, people sometimes publish knowledge on the internet or through other communication mediums that acts as a "knowledge bridge" for me to cross over to a new level of understanding. Sometimes the bridge steps over technical terms or avoids certain abstract concepts. For example, I may find a cybersecurity article that shows me how to configure my own web server using NGINX. However, the article is marketed as "set up your own website for cheap" rather than "setting up NGINX in Azure Cloud" or something else so technical that I would never have typed it into Google.

ChatGPT uses context clues and the way I speak to construct a knowledge or understanding bridge for me. This is more or less how people, and teachers in particular, work. That makes ChatGPT really good for exploring new topics to which you have no connection and no way of knowing how to get there. You may have a problem you need to solve but have no clue that there's a whole field dedicated to solving it, simply because you don't know the terminology.

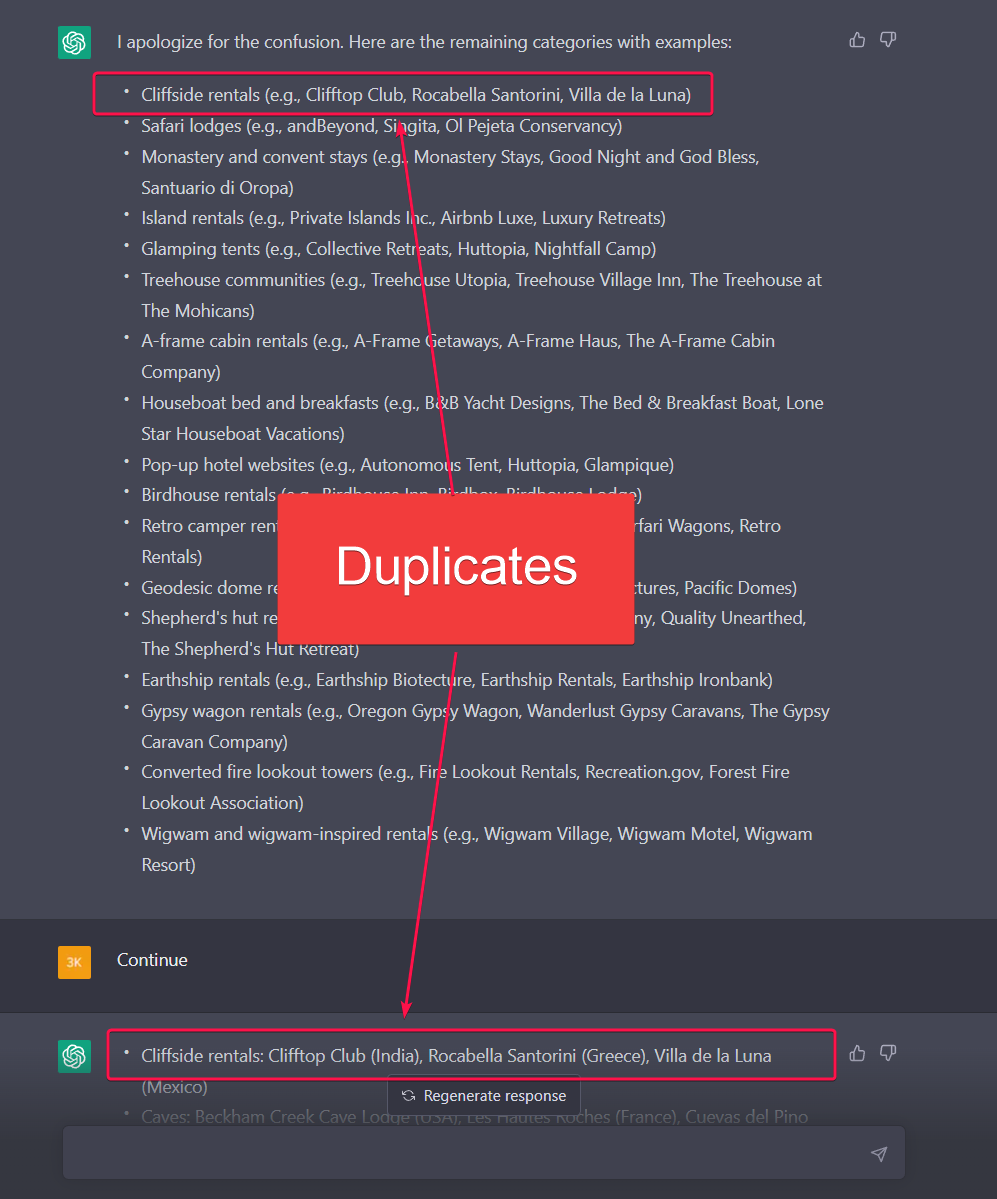

"Continue" and duplicated list items in ChatGPT

I use the tool mentioned above to fix the problem described below.

If you've used ChatGPT, you've probably noticed that it has response limits and that you need to say something like "Continue" or "keep going" to have it finish some of its responses. Sometimes it generates duplicates while doing this.

Here is an example of me looking for categories of places to stay for a honeymoon, and it generates a duplicate for "Cliffside rentals." This is where the tool I made can come in handy when you are trying to curate something for a particular topic that has lots of generated items in a list.
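To give a feel for what "fuzzy" deduplication means, here is a minimal sketch using Python's standard-library `difflib`. This is not necessarily how fuzzyListDedupeGUI works internally; the `normalize` and `fuzzy_dedupe` helpers and the 0.85 threshold are assumptions chosen for illustration, and every list item except "Cliffside rentals" is made up.

```python
from difflib import SequenceMatcher


def normalize(line):
    """Strip bullet markers, surrounding whitespace and a trailing period, then lowercase."""
    return line.strip().lstrip("-*• ").rstrip(".").lower()


def fuzzy_dedupe(lines, threshold=0.85):
    """Keep the first occurrence of each line and drop later near-duplicates.

    `threshold` is an assumed cut-off on SequenceMatcher's 0..1 similarity ratio.
    """
    kept = []
    for line in lines:
        candidate = normalize(line)
        if not candidate:
            continue  # skip blank lines
        is_duplicate = any(
            SequenceMatcher(None, candidate, normalize(seen)).ratio() >= threshold
            for seen in kept
        )
        if not is_duplicate:
            kept.append(line.strip())
    return kept


items = [
    "- Cliffside rentals",
    "- Treehouses",
    "- Overwater bungalows",
    "- Cliffside Rentals.",  # same item repeated after a "Continue"
    "- Tree houses",         # near-duplicate with different spacing
]
print(fuzzy_dedupe(items))
# → ['- Cliffside rentals', '- Treehouses', '- Overwater bungalows']
```

Comparing every line against every kept line is quadratic, which is fine for a chat-sized list but would need a smarter approach for very long ones. The threshold is also a trade-off: set it too low and genuinely different items get merged.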

Using ChatGPT to help you code

ChatGPT is not a developer "killer" either. In fact, GPT gets a lot of things wrong, but there are obvious reasons for that. However, it is great for streamlining your process, generating boilerplate code, or, in general, learning yet another abstraction in programming when you've already learned so many.

I used ChatGPT in several ways when I was coding:

- Explain potential solutions for weird errors

- Generate boilerplate or a layout for the code

- Help me design certain functions

- Help me with Tkinter syntax

A lot of the time, I can explain something back to ChatGPT, which is great for: 1) making sure I understand the topic and 2) correcting the model so it can help me more.

You still have to understand coding concepts and general debugging to use it. Most of the time I was using it to get a general layout, and then I would tweak the code. That beats digging through Stack Overflow posts, which can sometimes be full of pretentious, impatient, and toxic developers...only sometimes, but enough to make you cry when your post gets downvoted into oblivion.

Conclusion

GPT models and search engines are a great start toward improving people's overall ability to solve problems. In other words, these technologies have revolutionized problem-domain traversal by improving our ability to communicate and bridge knowledge gaps.

If we are to keep improving, though, we need a few things to happen:

- Privacy and AI legislation

- Better systems for creating specialized models or training workflows for personal ontology traversal / ChatGPT models

- Passionate people to push for innovative solutions